As Ofcom investigates Elon Musk’s X over the Grok deepfakes scandal, we ask: could this be the end of X in the UK?

There’s no doubt about it; the AI genie is well and truly out of the bottle.

Whether you’re terrified or utterly ecstatic about the endless possibilities its creation presents, one thing is for certain: our digital future depends on how responsibly and ethically it’s developed.

For many, tools such as ChatGPT have made for handy lifestyle companions, offering support with everything from interior design inspiration and mediating household disagreements to transforming the few ingredients left in the fridge into delicious, nutritious meals.

AI is being used within the medical field to facilitate earlier detection of certain cancers, is helping to save the global bee population, and is even informing critical action on climate change – all developments worth celebrating.

But unfortunately, it’s not all good news.

‘Weapons of abuse’

This week, UK watchdog Ofcom has launched an investigation into Elon Musk’s X over concerns that its AI tool, Grok, is being used to create ‘deepfakes’ – realistic AI-generated media that manipulate a person’s likeness, often for malicious purposes.

A statement released by Ofcom revealed there had been “deeply concerning reports” of the chatbot being used to create and share undressed images of people, as well as, sickeningly, sexualised images of children.

What can be done to keep X users safe?

If Ofcom finds that the platform has broken the law, it can fine X up to 10% of its worldwide revenue or £18 million, whichever is greater.

However, Tech Secretary Liz Kendall and Prime Minister Sir Keir Starmer have gone further than a simple regulatory review of the reports. They have said “all options are on the table”, including a total block on access to X in the UK if the platform fails to comply with legal standards on harmful content.

Initially, Elon Musk reportedly said that “authorities want any excuse for censorship”. Meanwhile, UK leaders have described deepfakes as “weapons of abuse”, labelling them “disgusting and unlawful”.

At the same time, the government is accelerating laws to make the creation and dissemination of non-consensual deepfakes a criminal offence. Platforms facilitating this could be held liable, incurring hefty fines of up to 10% of global revenue.

The future of X in the UK – will Bluesky take over?

While the government hasn’t ruled out a ban on accessing X in the UK, many are already choosing to boycott the platform, migrating over to Bluesky, where users are encouraged to unleash their creativity and “have some fun again”.

With detailed community guidelines around principles such as safety, respect, authenticity and legal compliance, Bluesky has created a baseline expectation for safe and respectful online interaction, providing a space for individuals and businesses alike to post, scroll and share freely, without the fear of harmful AI interference.

The importance of ethical AI use

This week’s news reflects broader anxiety about AI misuse and online safety, and underlines the importance of accessing information from trusted, ethical sources.

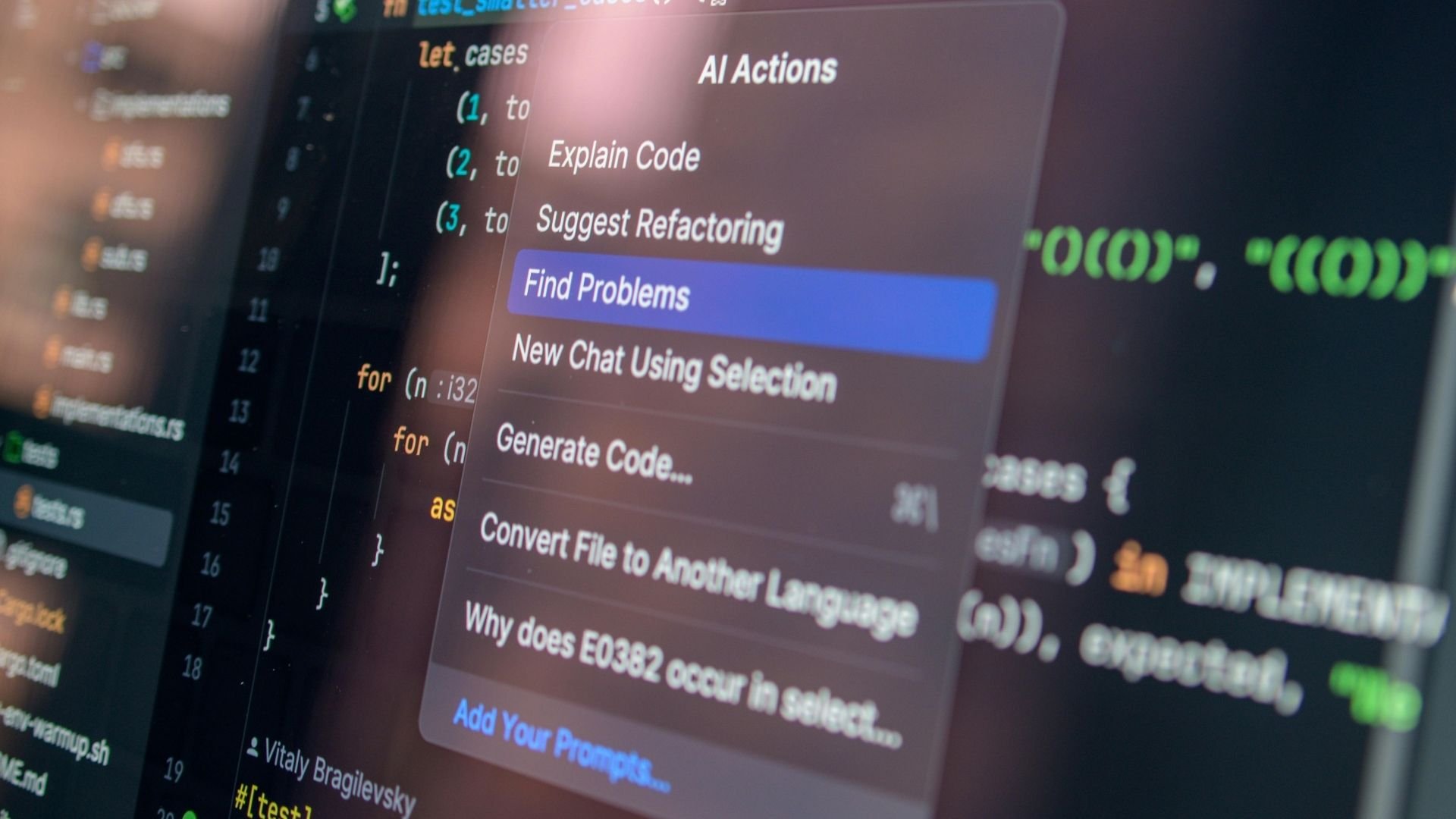

Here at Democracy, we’re paving the way for safe and ethical AI use and we strive to empower our clients to do the same, ensuring our outputs are credible, accurate and reliable.

How we’re winning with AI in 2026

Authenticity is one of the core values at the heart of all we do. Whether we’re undertaking the day-to-day of life at the agency or enhancing our proposition, showing up as our authentic selves and delivering meaningful, reliable content that will generate tangible results for our clients is simply non-negotiable.

We understand the importance of AI and will never underestimate its potential. This is why we treat AI tools like key stakeholders. We establish a baseline, allowing us to identify misinformation, which in turn empowers us to build a solid visibility strategy on the foundations of consistent, authoritative narratives for ourselves and for our clients.

If you’d like to learn more about how we’re utilising AI to supercharge our clients in 2026, drop us a line.

Sources:

https://www.bbc.co.uk/news/articles/cwy875j28k0o